The World's Proven Choice for Enterprise AI: NVIDIA® H100 GPUs Now Available

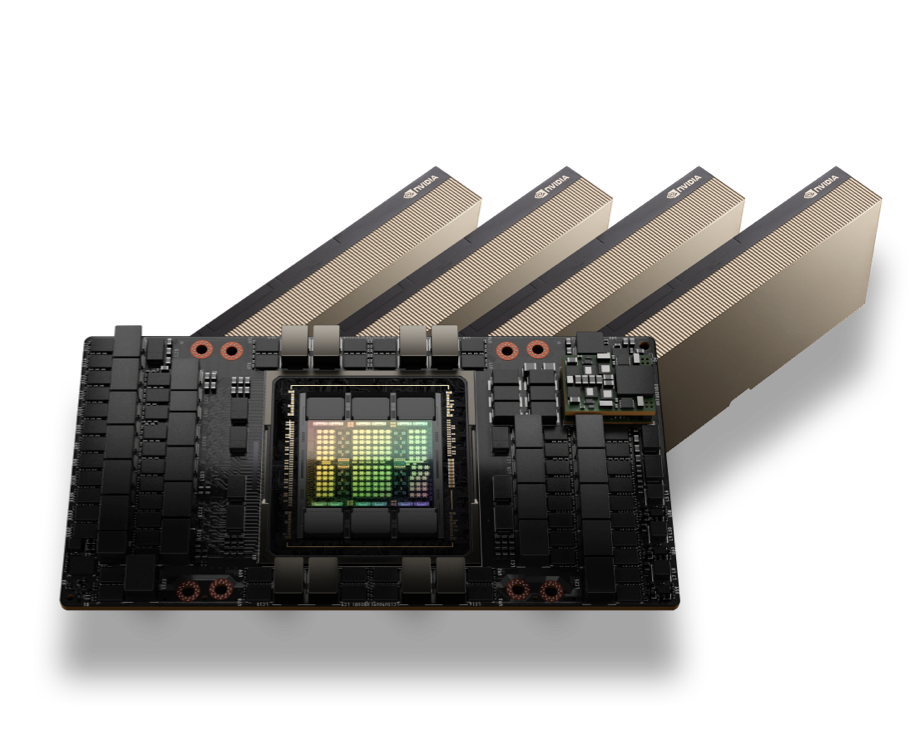

The NVIDIA® H100, powered by the new Hopper architecture, is a flagship GPU offering powerful AI acceleration, big data processing, and high-performance computing (HPC).

With H100 SXM you get:

- Additional computing power for building and training AI models

- Enhanced scalability

- High-bandwidth GPU-to-GPU communication

- Optimal performance density

Top Use Cases and Industries

Higher Education and Research

Frees you from node capacity planning and enables on-demand application scaling.

AI-Aided Design for the Manufacturing and Automotive Industries

Delivers resources with custom specifications in seconds, freeing you from node management so you can focus on application development.

Health Care and Life Science

Pay only while your instance is running, with no hidden fees such as data egress or ingress charges.

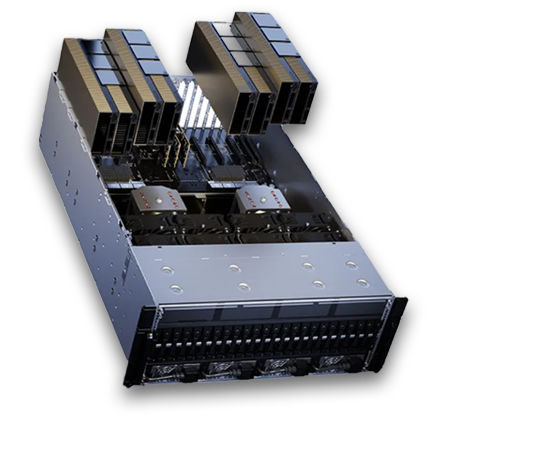

Tech Specs

| Specification | H100 SXM | H100 PCIe |
|---|---|---|
| GPU memory | 80 GB | 80 GB |
| GPU memory bandwidth | 3.35 TB/s | 2 TB/s |
| Max thermal design power (TDP) | Up to 700 W (configurable) | 300-350 W (configurable) |
| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 10 GB each | |
| Form factor | SXM | PCIe, dual-slot air-cooled |
| Interconnect | NVLink: 900 GB/s; PCIe Gen5: 128 GB/s | NVLink: 600 GB/s; PCIe Gen5: 128 GB/s |
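The interconnect figures in the table can be turned into a rough lower bound on transfer time: payload size divided by peak bandwidth. The sketch below is illustrative only. The 80 GB payload and the function name `ideal_transfer_seconds` are hypothetical. It uses decimal units (1 GB = 10^9 bytes) and treats each listed figure as a single usable rate. That is an assumption, since link bandwidths are often quoted as aggregate bidirectional numbers, and real transfers will be slower.

```python
# Peak interconnect figures as listed in the Tech Specs table (decimal GB/s).
# Assumption: each figure is treated as one usable rate; vendors often quote
# aggregate bidirectional bandwidth, and real transfers achieve less than peak.
PEAK_BANDWIDTH_GB_S = {
    "H100 SXM NVLink": 900.0,
    "H100 PCIe NVLink": 600.0,
    "PCIe Gen5": 128.0,
}


def ideal_transfer_seconds(payload_gb: float, bandwidth_gb_s: float) -> float:
    """Hypothetical helper: lower-bound time = payload size / peak bandwidth."""
    if bandwidth_gb_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_s


if __name__ == "__main__":
    payload_gb = 80.0  # hypothetical payload: the full 80 GB of one GPU's memory
    for link, bw in PEAK_BANDWIDTH_GB_S.items():
        secs = ideal_transfer_seconds(payload_gb, bw)
        print(f"{link:>18}: {secs:.3f} s")
```

Under these assumptions, moving 80 GB takes about 0.09 s at 900 GB/s over NVLink versus about 0.6 s at 128 GB/s over PCIe Gen5. This is what "high-bandwidth GPU-to-GPU communication" means in practice for H100 SXM.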