The world's most powerful GPU

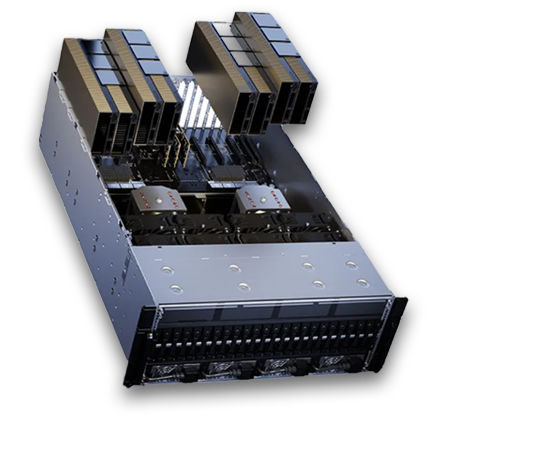

NVIDIA® H200 GPUs NOW AVAILABLE

The NVIDIA® H200 Tensor Core GPU combines Hopper-architecture performance with 141GB of HBM3e memory at 4.8TB/s, significantly accelerating generative artificial intelligence and high-performance computing (HPC) workloads.

With H200 SXM you get:

- Higher Performance and Larger, Faster Memory

- Unlock Insights With High-Performance LLM Inference

- Supercharge High-Performance Computing

- Reduce Energy and TCO

Top 3 Use Cases

Generative Artificial Intelligence

The H200 GPU is well suited to generative AI applications, which use deep learning to generate new data such as images, video, audio, and text, in fields like VR, entertainment, medical imaging, and drug design.

High-Performance Computing

The H200 GPU accelerates high-performance computing tasks in scientific research and industrial applications, such as weather and climate forecasting, drug discovery, and quantum computing simulation.

Deep Learning and Large Language Models

The H200 GPU excels in deep learning, specifically in training and inference of large language models, handling vast numbers of parameters for tasks like text analysis, language translation, and content creation.
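As a rough illustration of why memory capacity matters for large language models, the sketch below estimates whether a model's weights fit on a single H200 with 141GB. It is a back-of-envelope calculation under stated assumptions: it counts weights only (no KV cache, activations, or framework overhead), treats 1GB as 10^9 bytes, and uses hypothetical helper names with typical bytes-per-parameter values for each precision.

```python
# Rough check: do a model's weights fit in one H200's 141 GB of GPU memory?
# Assumptions: weights only (KV cache, activations, and framework overhead are
# ignored) and 1 GB = 1e9 bytes. Helper names are hypothetical, for illustration.

H200_MEMORY_GB = 141  # GPU memory from the H200 SXM spec sheet

# Typical storage size of one parameter at each precision, in bytes.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1, "int8": 1}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the model weights in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_gpu(num_params: float, dtype: str, memory_gb: float = H200_MEMORY_GB) -> bool:
    """True if the weights alone fit within the given GPU memory."""
    return weight_footprint_gb(num_params, dtype) <= memory_gb


if __name__ == "__main__":
    for dtype in ("bf16", "fp8"):
        size = weight_footprint_gb(70e9, dtype)
        print(f"70B params @ {dtype}: {size:.0f} GB, fits: {fits_on_one_gpu(70e9, dtype)}")
```

At BF16 a 70B-parameter model's weights alone take about 140GB, nearly the whole card, so in practice such a model would typically be served at FP8 or split across several GPUs to leave room for the KV cache.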

Tech Specs

| Specification | H200 SXM |
|---|---|
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core\* | 989 TFLOPS |
| BFLOAT16 Tensor Core\* | 1,979 TFLOPS |
| FP8 Tensor Core\* | 3,958 TFLOPS |
| INT8 Tensor Core\* | 3,958 TOPS |
| GPU Memory | 141GB HBM3e |
| GPU Memory Bandwidth | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 16.5GB each |
| Form Factor | SXM |
| Interconnect | NVIDIA NVLink: 900GB/s; PCIe Gen5: 128GB/s |
| Server Options | NVIDIA HGX H200 partner and NVIDIA-Certified Systems with 4 or 8 GPUs |
| NVIDIA AI Enterprise | Add-on |

\* With sparsity.
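The memory bandwidth and FP64 figures in the table can be combined into simple roofline estimates. This is a minimal sketch under assumptions not stated in the spec sheet: a kernel is treated as memory bound when its arithmetic intensity (FLOPs per byte moved) falls below peak FLOPS divided by memory bandwidth, and batch-size-1 LLM decoding is assumed to stream every weight once per generated token, ignoring KV-cache traffic and real-world kernel efficiency. The function names and the 70B FP8 model are illustrative only.

```python
# Back-of-envelope roofline estimates for one H200 SXM, using spec-sheet peaks.
# Assumption: these are theoretical peaks; real kernels reach only a fraction.

BANDWIDTH_BYTES_PER_S = 4.8e12  # GPU memory bandwidth: 4.8 TB/s
FP64_FLOPS = 34e12              # FP64 (non-Tensor Core): 34 TFLOPS
FP64_TC_FLOPS = 67e12           # FP64 Tensor Core: 67 TFLOPS


def ridge_point(peak_flops: float, bandwidth: float = BANDWIDTH_BYTES_PER_S) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel becomes compute bound."""
    return peak_flops / bandwidth


def max_decode_tokens_per_s(weight_bytes: float, bandwidth: float = BANDWIDTH_BYTES_PER_S) -> float:
    """Upper bound on batch-size-1 decode speed if every token reads all weights once."""
    return bandwidth / weight_bytes


if __name__ == "__main__":
    print(f"FP64 ridge point: {ridge_point(FP64_FLOPS):.1f} FLOP/byte")
    print(f"FP64 Tensor Core ridge point: {ridge_point(FP64_TC_FLOPS):.1f} FLOP/byte")
    # Hypothetical model: 70B parameters stored in FP8 (1 byte each) = 70e9 bytes.
    print(f"70B FP8 model decode bound: {max_decode_tokens_per_s(70e9):.0f} tokens/s")
```

Under these assumptions, FP64 kernels need roughly 7 FLOPs per byte (about 14 on the FP64 Tensor Cores) before compute rather than bandwidth limits them, and a 70GB FP8 model is capped at roughly 69 tokens per second per sequence at batch size 1. Batching more requests reuses each weight read across sequences, which is why serving systems batch to raise total throughput.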